kmeans_pp <- function(X, # data

K # number of centroids

) {

# sample initial centroid from distinct rows of X

X <- unique(as.matrix(X))

new_center_index <- sample(nrow(X), 1)

centers <- X[new_center_index,, drop=FALSE]

# let x be all observations not yet chosen as a centroid

X <- X[-new_center_index,, drop=FALSE]

if(K >= 2) {

# loop over remaining centroids

for(kk in 2:K) {

# calculate distances from all observations to all already chosen centroids

distances <- apply(X, 1, function(x) min(sum((x - centers)^2)))

# sample new centroid with probability proportional to squared Euclidean distance

probabilities <- distances/sum(distances)

new_center_index <- sample(nrow(X), 1, prob=probabilities)

# record the new centroid and remove it for the next iteration

centers <- rbind(centers, X[new_center_index,, drop=FALSE])

X <- X[-new_center_index,, drop=FALSE]

}

}

# add random jitter to distinguish non-unique centroids and return

centers[duplicated(centers)] <- jitter(centers[duplicated(centers)])

return(centers)

}8 Dissimilarity-based Cluster Analysis of Educational Data: A Comparative Tutorial using R

1 Introduction

Cluster analysis is a term used to describe a broad range of techniques which have the goal of uncovering groups of observations in a data set. The typical aim of cluster analysis is to categorise observations into groups in such a way that observations within the same group are in some sense more similar to each other, while being relatively dissimilar to those in other groups [1]. In other words, clustering methods uncover group structure in heterogeneous populations by identifying more homogeneous groupings of observations which may represent distinct, meaningful subpopulations. Using machine learning terminology, cluster analysis corresponds to unsupervised learning, whereby groups are identified by relying solely on the intrinsic characteristics (typically dissimilarities), without guidance from unavailable ground truth group labels. Indeed, foreknowledge of the fixed number of groups in a data set is characteristic of supervised learning and the distinct field of classification analysis.

It is important to note that there is no universally applicable definition of what constitutes a cluster [2, 3]. Indeed, in the absence of external information in the form of existing “true” group labels, different clustering methods can reveal different things about the same data. There are many different ways to cluster the same data set, and different methods may yield solutions with different assignments, or even differ in the number of groups they identify. Consequently, we present several cluster analysis algorithms in this chapter; namely, \(K\)-Means [4] in Section 8.3.1, a generalisation thereof, \(K\)-Medoids [5], in Section 8.3.1.2.3, and agglomerative hierarchical clustering [6] in Section 8.3.2. We apply each method in a comparative study in our tutorial using R [7], with applications to a data set about the participants from discussion forum of a massive open online course (MOOC) for teachers.

The clustering methods we review in this chapter are designed to find mutually exclusive, non-overlapping groups in a data set, i.e., they recover a hard partition whereby each observation belongs to one group only. This is in contrast to soft clustering approaches under which each observation is assigned a probability of belonging to each group. One such example of soft clustering is the model-based clustering paradigm, which is discussed in the later Chapter 9 [8]. While model-based clustering methods, as the name suggests, are underpinned by the assumption of generative probabilistic models [9], the more traditional dissimilarity-based methods on which this chapter is focused are purely algorithmic in nature and rely on heuristic criteria regarding the pairwise dissimilarities between objects.

Heuristic clustering algorithms can be further divided into two categories; partitional clustering (e.g., \(K\)-Means and \(K\)-Medoids) and hierarchical (of which we focus on the agglomerative variant). Broadly speaking, partitional clustering methods start with an initial grouping of observations and iteratively update the clustering until the “best” clustering is found, according to some notion of what defines the “best” clustering. Hierarchical clustering methods, on the other hand, is more sequential in nature; a tree-like structure of nested clusterings is built up via successive mergings of similar observations, according to a defined similarity metric. In our theoretical expositions and our applications in the R tutorial, we provide some guidelines both on how to choose certain method-specific settings to yield the best performance and how to determine the optimal clustering among a set of competing methods.

The remainder of this chapter proceeds as follows. In Section 8.2, we review relevant literature in which dissimilarity-based clustering methods have been applied in the realm of educational research. In Section 8.3, we describe the theoretical underpinnings of each method in turn and discuss relevant practical guidelines which should be followed to secure satisfactory performance from each method throughout. Within Section 8.4, we introduce the data set of our case study in Section 8.4.1 and give an overview of some required pre-processing steps in Section 12.4.3, and then present a tutorial using R for each clustering method presented in this chapter in Section 8.4.2, with a specific focus on identifying the optimal clustering solution in Section 8.4.2.4. Finally, we conclude with a discussion and some recommendations for related further readings in Section 8.5, with a particular focus on some limitations of dissimilarity-based clustering which are addressed by other frameworks in the broader field of cluster analysis.

2 Clustering in education: review of the literature

In education, clustering is among the oldest and most common analysis methods, predating the field of learning analytics and educational data mining by several decades. Such early adoption of clustering is due to the immense utility of cluster analysis in helping researchers to find patterns within data, which is a major pursuit of education research [10]. Interest was fueled by the increasing attention to heterogeneity and individual differences among students, their learning processes, and their approaches to learning [11, 12]. Finding such patterns or differences among students allows teachers and researchers to improve their understanding of the diversity of students and tailor their support to different needs [13]. Finding subgroups within cohorts of students is a hallmark of so-called “person-centered methods”, to which clustering belongs [8].

A person-centered approach stands in contrast to the variable-centered methods which consider that most students belong to a coherent homogeneous group with little or negligible differences [14]. Variable-centered methods assume that there is a common average pattern that represents all students, that the studied phenomenon has a common causal mechanism, and that the phenomenon evolves in the same way and results in similar outcomes amongst all students. These assumptions are largely understood to be unrealistic, “demonstrably false, and invalidated by a substantial body of uncontested scientific evidence” [15]. In fact, several analytical problems necessitate clustering, e.g., where opposing patterns exist [16]. For instance, in examining attitudes towards an educational intervention using variable-centered methods, we get an average that is simply the sum of negative and positive attitudes. If the majority of students have a positive attitude towards the proposed intervention —combined with a minority against— the final result will imply that students favour such intervention. The conclusions of this approach are not only wrong but also dangerous as it risks generalising solutions to groups to whom it may cause harm.

Therefore, clustering has become an essential method in all subfields of education (e.g., education psychology, education technology, and learning analytics) having operationalised almost all quantitative data types and been integrated with most of the existing methods [10, 17]. For instance, clustering became an essential step in sequence analysis to discover subsequences of data that can be understood as distinct approaches or strategies of students’ behavior [18]. Similar applications can be found in social network analysis data to identify collaborative roles, or in multimodal data analysis to identify moments of interest (e.g., synchrony). Similarly, clustering has been used with survey data to find attitudinal patterns or learning approaches to mention a few [17]. Furthermore, identifying patterns within students’ data is a prime educational interest in its own right and therefore, has been used extensively as a standalone analysis technique to identify subgroups of students who share similar interests, attitudes, behaviors, or background.

Clustering has been used in education psychology for decades to find patterns within self-reported questionnaire data. Early examples include the work of Beder and Valentine [19] who used responses of a motivational questionnaire to discover subgroups of students with different motivational attitudes. Similarly, Clément et al. [20] used the responses of a questionnaire that assessed anxiety and motivation towards learning English as a second language to find clusters which differentiated students according to their attitudes and motivation. Other examples include the work by Fernandez-Rio et al. [21] who used hierarchical clustering and \(K\)-Means to identify distinct student profiles according to their perception of the class climate. With the digitalisation of educational institutions, authors also sought to identify students profiles using admission data and learning records. For instance, Cahapin et al. [22] used several algorithms such as \(K\)-Means and agglomerative hierarchical clustering to identify patterns in students’ admission data.

The rise of online education opened many opportunities to investigate students’ behavior in learning management systems based on the trace log data that students leave behind them when working on online activities. An example is the work by Saqr et al. [23], who used \(K\)-Means to cluster in-service teachers’ approaches to learning in a MOOC according to their frequency of clicks on the available learning activities. Recently, research has placed a focus on the temporality of students’ activities, rather than the mere count. For this purpose, clustering has been integrated within sequence analysis to find subsequences which represent meaningful patterns of students behavior. For instance, Jovanovic et al. [24] used hierarchical clustering to identify distinct learning sequential patterns based on students’ online activities in a learning management system within a flipped classroom, and found a significant association between the use of certain learning sequences and learning outcomes. Using a similar approach, López-Pernas et al. [25] used hierarchical clustering to identify distinctive learning sequences in students’ use of the learning management system and an automated assessment tool for programming assignments. They also found an association between students’ activity patterns and their performance in the course final exam. Several other examples exist for using clustering to find patterns within sequence data [16, 26, 27].

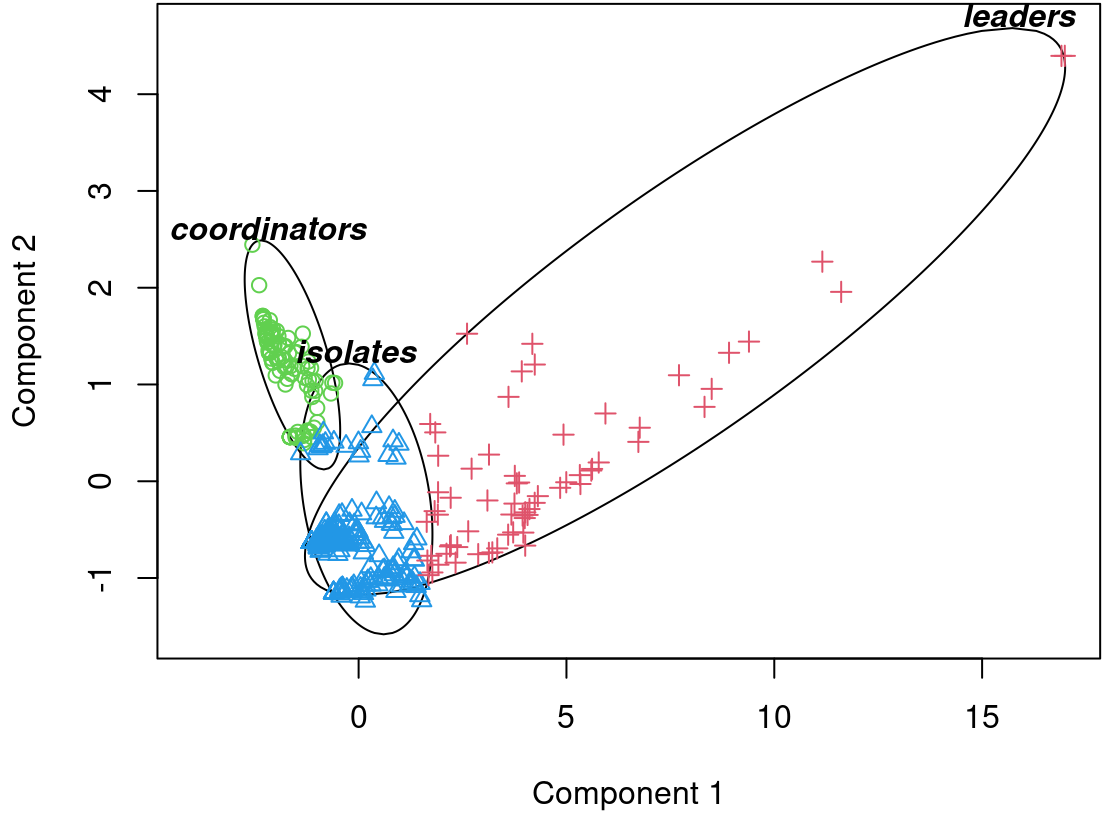

A growing application of clustering can be seen in the study of computer-supported collaborative learning. Saqr and López-Pernas [28] used cluster analysis to discover students with similar emergent roles based on their forum interaction patterns. Using the \(K\)-Means algorithm and students’ centrality measures in the collaboration network, they identified three roles: influencers, mediators, and isolates. Perera et al. [29] used \(K\)-Means to find distinct groups of similar teams and similar individual participating students according to their contributions in an online learning environment for software engineering education. They found that several clusters which shared some distinct contribution patterns were associated with more positive outcomes. Saqr and López-Pernas [30] analysed the temporal unfolding of students’ contributions to group discussions. They used hierarchical clustering to identify patterns of distinct students’ sequences of interactions that have a similar start and found a relationship between such patterns and student achievement.

An interesting application of clustering in education concerns the use of multimodal data. For instance, Vieira et al. [31] used \(K\)-Means clustering to find patterns in students’ presentation styles according to their voice, position, and posture data. Other innovative uses involve students’ use of educational games [32, 33], virtual reality [34], or artificial intelligence [35]. Though this chapter illustrates traditional dissimilarity-based clustering algorithms with applications using R to data on the centrality measures of the participants of a MOOC, along with related demographic characteristics, readers are also encouraged to read Chapter 9 [8] and Chapter 10 [18] in which further tutorials are presented and additional literature is reviewed, specifically in the contexts of clustering using the model-based clustering paradigm and clustering longitudinal sequence data, respectively. We now turn to an explication of the cluster analysis methodologies used in this chapter’s tutorial.

3 Clustering methodology

In this section, we describe some of the theory underpinning the clustering methods used in the later R tutorial in Section 8.4. We focus on some of the most widely-known heuristic dissimilarity-based clustering algorithms; namely, \(K\)-means, \(K\)-medoids, and agglomerative hierarchical clustering. In Section 8.3.1, we introduce \(K\)-Means clustering by describing the algorithm, outline the arguments to the relevant R function kmeans(), and discuss some of the main limitations and practical concerns researchers should be aware of in order to obtain the best performance when running \(K\)-Means. We also discuss the related \(K\)-Medoids algorithm and the associated function pam() in the cluster library [36] in R, and situate this method in the context of an extension to \(K\)-Means designed to overcome its reliance on squared Euclidean distances. In Section 8.3.2, we introduce agglomerative hierarchical clustering and the related R function hclust(), while outlining various choices available to practitioners and their implications. Though method-specific strategies of choosing the optimal number of clusters \(K\) are provided throughout Section 8.3.1 and Section 8.3.2, we offer a more detailed treatment of this issue in Section 8.3.3, particularly with regard to criteria that can guide the choice of clustering solution among multiple competing methodologies, and not only the choice of the number of clusters \(K\) for a given method.

3.1 \(K\)-Means

\(K\)-means is a widely-used clustering algorithm in learning analytics and indeed data analysis and machine learning more broadly. It is an unsupervised technique that seeks to divide a typically multivariate data set into some pre-specified number of clusters, based on the similarities between observations. More specifically, \(K\)-means aims to partition \(n\) objects \(\mathbf{x}_1,\ldots,\mathbf{x}_n\), each having measurements on \(j=1,\ldots,d\) strictly continuous covariates, into a set of \(K\) groups \(\mathcal{C}=\{C_1,\ldots,C_K\}\), where \(C_k\) is the set of \(n_k\) objects in cluster \(k\) and the number of groups \(K \le n\) is pre-specified by the practitioner and remains fixed. \(K\)-means constructs these partitions in such a way that the squared Euclidean distance between the row vector for observations in a given cluster and the centroid (i.e., mean vector) of the given cluster are smaller than the distances to the centroids of the remaining clusters. In other words, \(K\)-means aims to learn both the cluster centroids and the cluster assignments by minimising the within-cluster sums-of-squares (i.e., variances). Equivalently, this amounts to maximising the between-cluster sums-of-squares, thereby capturing heterogeneity in a data set by partitioning the observations into homogeneous groups. What follows is a brief technical description of the \(K\)-Means algorithm in Section 8.3.1.1, after which we describe some limitations of \(K\)-Means and discuss practical concerns to be aware of in order to optimise the performance of the method in Section 8.3.1.2. In particular, we present the widely-used \(K\)-Medoids extension in Section 8.3.1.2.3.

3.1.1 \(K\)-Means algorithm

The origins of \(K\)-Means are not so straightforward to summarise. The name was initially used by James MacQueen in 1967 [4]. However, the standard algorithm was first proposed by Stuart Lloyd in a Bell Labs technical report in 1957, which was later published as a journal article in 1982 [37]. In order to understand the ideas involved, we must first define some relevant notation. Let \(\mu_k^{(j)}\) denote the centroid value for the \(j\)-th variable in cluster \(C_k\) by \[\mu_k^{(j)} = \frac{1}{n_k}\sum_{\mathbf{x}_i \in C_k} x_{ij}\] and the complete centroid vector for cluster \(C_k\) by \[\boldsymbol{\mu}_k = \left(\mu_k^{(1)}, \ldots, \mu_k^{(p)}\right)^\top.\] These centroids therefore correspond to the arithmetic mean vector of the observations in cluster \(C_k\). Finding both these centroids \(\boldsymbol{\mu}_1,\ldots,\boldsymbol{\mu}_K\) and the clustering partition \(\mathcal{C}\) is computationally challenging and typically proceeds by iteratively alternating between allocating observations to clusters and then updating the centroid vectors. Formally, the objective is to minimise the total within-cluster sum-of-squares \[ \sum_{i=1}^n\sum_{k=1}^K z_{ik} \lVert \mathbf{x}_i - \boldsymbol{\mu}_k\rVert_2^2, \tag{1}\]

where \(\lVert\mathbf{x}_i - \boldsymbol{\mu}_k\rVert_2^2=\sum_{j=1}^p\left(x_{ij}-\mu_k^{(j)}\right)^2\) denotes the squared Euclidean distance to the centroid \(\boldsymbol{\mu}_k\) — such that \(\lVert\cdot\rVert_2\) denotes the \(\ell^2\) norm— and \(\mathbf{z}_i=(z_{i1},\ldots,z_{iK})^\top\) is a latent variable such that \(z_{ik}\) denotes the cluster membership of observation \(i\); \(z_{ik}=1\) if observation \(i\) belongs to cluster \(C_k\) and \(z_{ik}=0\) otherwise. This latent variable construction implies \(\sum_{i=1}^n z_{ik}=n_k\) and \(\sum_{k=1}^Kz_{ik}=1\), such that each observation belongs wholly to one cluster only. As the total variation in the data, which remains fixed, can be written as a sum of the total within-cluster sum-of-squares (TWCSS) from Equation 8.1 and the between-cluster sum-of-squares as follows

\[\sum_{i=1}^n \left(\mathbf{x}_i - \bar{\mathbf{x}}\right)^2=\sum_{i=1}^n\sum_{k=1}^K z_{ik} \lVert \mathbf{x}_i - \boldsymbol{\mu}_k\rVert_2^2 + \sum_{k=1}^Kn_k\lVert\boldsymbol{\mu}_k - \bar{\mathbf{x}}\rVert_2^2,\]

where \(\bar{\mathbf{x}}\) denotes the overall sample mean vector, minimising the TWCSS endeavours to ensure that observations in the same cluster are maximally similar to observations in the same cluster and maximally dissimilar to those in other clusters.

Using the notation just introduced, a generic \(K\)-means algorithm would proceed as follows:

Initialise: Select the number of desired clusters \(K\) and define \(K\) initial centroid vectors \(\boldsymbol{\mu}_1,\ldots,\boldsymbol{\mu}_K\).

Allocate: Find the optimal \(z_{ik}\) values that minimise the objective, holding the \(\mu_k\) values fixed.

Calculate the squared Euclidean distance between each observation and each centroid vector and allocate each object to the cluster corresponding to the initial centroid to which it is closest in terms of squared Euclidean distance. Looking at the objective function in Equation 8.1 closely and examining the contribution of observation \(i\), we need to choose the value of \(\mathbf{z}_i\) which minimises the expression \(\sum_{k=1}^K z_{ik} \lVert \mathbf{x}_i - \boldsymbol{\mu}_k\rVert_2^2\). This is achieved by setting \(z_{ik}=1\) for the value of \(k\) that has smallest \(\lVert \mathbf{x}_i - \boldsymbol{\mu}_k\rVert_2^2\) and setting \(z_{ik^\prime}=0\:\forall\:k^\prime\ne k\) everywhere else.

- Update: Find the optimal \(\boldsymbol{\mu}_k\) values that minimise the objective, holding the \(z_{ik}\) values fixed.

If we re-write the objective function in Equation 8.1 as \[\sum_{i=1}^n\sum_{k=1}^K\sum_{j=1}^p z_{ik} \left(x_{ij}-\mu_k^{(j)}\right)^2,\] we can use the fact that \[\frac{\partial}{\partial \mu_k^{(j)}}\left(x_{ij}-\mu_k^{(j)}\right)^2=-2\left(x_{ij}-\mu_k^{(j)}\right)\] to obtain \[\frac{\partial}{\partial \mu_k^{(j)}}\sum_{i=1}^n\sum_{k=1}^K\sum_{j=1}^p z_{ik} \left(x_{ij}-\mu_k^{(j)}\right)^2=-2\sum_{i=1}^nz_{ik}\left(x_{ij}-\mu_k^{(j)}\right).\] Solving this expression for \(\mu_k^{(j)}\) yields \[\mu_k^{(j)}=\frac{\sum_{i=1}^nz_{ik}x_{ij}}{\sum_{i=1}^nz_{ik}}=\frac{1}{n_k}\sum_{\mathbf{x}_i \in C_k} x_{ij}.\]

- Iterate: One full iteration of the algorithm consists of an allocation step (Step 2) and an update step (Step 3). Steps 2 and 3 are repeated until no objects can be moved between clusters, at which point the algorithm has converged to at least a local minimum of Equation 8.1.

Upon convergence, we obtain not only the estimated partition \(\mathcal{C}=\{C_1,\ldots,C_K\}\), indicating the cluster membership of each observation, but also the estimated cluster centroids \(\boldsymbol{\mu}_1,\ldots,\boldsymbol{\mu}_K\) which act as cluster prototypes, efficiently summarising the characteristics of each cluster. The algorithm just described is just one standard variant of \(K\)-Means. there have been several algorithms proposed for the same objective which derive their names from the author who proposed them; namely MacQueen [4], Lloyd [37], Forgy [38], and Hartigan and Wong [39]. They differ in some subtle ways, particularly with regard to how initial centroids in Step 1 are chosen and whether Steps 3 and 4 are applied to all \(n\) observations simultaneously or whether allocations and centroids are updated for each observation one-by-one (i.e., some variants iterate over Step 2 and Step 3 in a loop over \(i=1,\ldots,n\)). Without going into further details, we note that the default option for kmeans() in R uses the option algorithm="Hartigan-Wong" and this is what we will henceforth adopt throughout.

3.1.2 \(K\)-Means limitations and practical concerns

Though \(K\)-Means is a useful tool in many application contexts due to its conceptual and computational simplicity —so ubiquitous, in fact, that the kmeans() function in R is available without loading any additional libraries— it suffers from numerous limitations and some care is required in order to obtain reasonable results. We now discuss some of the main limitations in turn, but note that each is addressed explicitly throughout the \(K\)-Means application portion of the R tutorial in Section 8.4.2.1. The concerns relate to choosing \(K\) (Section 8.3.1.2.1), choosing initial centroids \(\boldsymbol{\mu}_1,\ldots,\boldsymbol{\mu}_K\) (Section 8.3.1.2.2), and relaxing the reliance on squared Euclidean distances with the more general \(K\)-Medoids method (Section 8.3.1.2.3).

3.1.2.1 Fixed \(K\) and the elbow method

The first major drawback is that the number of clusters \(K\) must be pre-specified. This is a key input parameter: if \(K\) is too low, dissimilar observations will be wrongly grouped together; if \(K\) is too large, observations will be partitioned into many small, similar clusters which may not be meaningfully different. Choosing the optimal \(K\) necessitates running the algorithm at various fixed values of \(K\) and finding the single value of \(K\) which best balances interpretability, parsimony, and fit quality. Fit quality is measured by the TWCSS, i.e., the objective function in Equation 8.1. Increasing \(K\) indefinitely will cause the TWCSS to decrease indefinitely, but this is not what we want. Instead, we seek a \(K\) value beyond which the decrease in TWCSS is minimal, in order to yield a parsimonious solution with a reasonable number of clusters to interpret, without overfitting the data or merely subdividing the actual groups. Thus, a commonly used heuristic graphical method for determining the optimal \(K\) value is to plot a range of \(K\) values against the corresponding obtained TWCSS values and look for an “elbow” or kink in the resulting curve. Such a plot will guide the choice of \(K\) in the \(K\)-Means portion of R tutorial which follows in Section 8.4.2.1.

3.1.2.2 Initialisation and \(K\)-Means

An especially pertinent limitation which must be highlighted is that \(K\)-Means is liable to converge to sub-optimal local minima, i.e., it is not guaranteed to converge to the global minimum of the objective function in Equation 8.1. Many practitioners have observed that the performance of \(K\)-Means is particularly sensitive to a good choice of initial cluster centroids in Step 1. Indeed, as different initial settings can lead to different clusterings of the same data, good starting values are vital to the success of the \(K\)-Means algorithm. Typically, \(K\) random vectors are used to define the initial \(\boldsymbol{\mu}_1,\ldots,\boldsymbol{\mu}_K\) centroids. One means of mitigating (but not completely remedying) the problem is to run \(K\)-Means with a suitably large number of random starting values and choose the solution associated with the set of initial centroids which minimise the TWCSS criterion.

In order to contextualise this issue, it is prudent to first describe some of the main arguments to the kmeans() R function. The following list is a non-exhaustive list of the available arguments to kmeans():

x: a numeric matrix of data or adata.framewith all numeric columns.centers: either the number of clusters \(K\) or a set of \(K\) initial (distinct) cluster centroids.nstart: ifcentersis specified as a number, this represents the number of random sets of \(K\) initial centroids with which to run the algorithm.iter.max: the maximum number of allocation/update cycles allowed per set of initial centroids.

The arguments nstart and iter.max have default values of 1 and 10, respectively. Thus, a user running kmeans() with centers specified as a number will, by default, only use one random set of initial centroids, to which the results are liable to be highly sensitive, and will have the algorithm terminate after just ten iterations, regardless of whether convergence was achieved. It would seem be an improvement, therefore, to increase the values of nstart and iter.max from these defaults. Fortunately, the function automatically returns the single optimal solution according to the random initialisation which yields the lowest TWCSS when nstart exceeds 1.

Though this will generally lead to better results, this approach can be computationally onerous if the number of observations \(n\), number of features \(d\), or size of the range of fixed \(K\) values under consideration is large. An alternative strategy, which greatly reduces the computational burden and the sensitivity of the final solution to the initial choices of \(\boldsymbol{\mu}_1,\ldots,\boldsymbol{\mu}_k\) is to choose a suitable set of informed starting values in a data-driven fashion. To this end, the so-called \(K\)-Means algorithm was proposed [40] in order to improve the performance of \(K\)-Means by replacing Step 1 with an iterative distance-weighting scheme to select the initial cluster centroids. Though there is still randomness inherent in \(K\)-Means, this initialisation technique ensures that the initial centroids are well spread out across the data space, which increases the likelihood of converging to the true global minimum. The \(K\)-Means algorithm works as follows:

Choose an initial centroid uniformly at random from the rows of the data set.

For each observation not yet chosen as a centroid, compute \(D^2(\mathbf{x}_i)\), which represents the squared Euclidean distance between \(\mathbf{x}_i\) and the nearest centroid that has already been chosen.

Randomly sample new observation as a new centroid vector with probability proportional to \(D^2(\mathbf{x}_i)\).

Repeat Steps B and C until \(K\) centroids have been chosen. If any of the chosen initial centroids are not distinct, add a small amount of random jitter to distinguish the non-unique centroids.

Proceed as per Steps 2–4 of the traditional \(K\)-Means algorithm (or one of its variants).

Although these steps take extra time, \(K\)-Means itself tends to converge very quickly thereafter and thus \(K\)-Means actually reduces the computational burden. A manual implementation of the \(K\)-Means is provided by the function kmeans_pp() below. Its output can be used as the centers argument when running kmeans().

However, it should be noted that there is still inherent randomness in \(K\)-Means —note the use of sample() in lines 7 and 22— and the algorithm is liable to produce different initial centroids in different runs on the same data. In effect, \(K\)-Means does not remove the burden of random initialisation; it is merely a way to have more informed random initialisations. Thus, it would be prudent to run \(K\)-Means with \(K\)-Means initialisation and select the solution which minimises the TWCSS, to transfer the burden of requiring multiple runs of \(K\)-Means with random starting values to fewer runs of \(K\)-Means followed by \(K\)-Means with more informed starting values. We adopt this strategy in the later R tutorial in Section 8.4.2.1.

3.1.2.3 \(K\)-Medoids and other distances

The \(K\)-Means objective function in Equation 8.1 explicitly relies on squared Euclidean distances and requires all features in the data set to be strictly continuous. An artefact of this distance measure is that it is generally recommended to standardise all features of have a mean of zero and unit variance prior to running \(K\)-Means. In general, standardisation is advisable if the values are of incomparable units (e.g., height in inches and weight in kilogram). More specifically for \(K\)-Means, it is desirable to ensure all features have comparable variances to avoid having variables with higher magnitudes and variances dominate the distance calculation and have an undue prominence on the clustering partition obtained. While we employ such normalisation to the data used in our R tutorial when applying some pre-processing steps in Section 12.4.3, we note that this is not sufficient to overcome all shortcomings of relying on squared Euclidean distances.

For these reasons and more, \(K\)-Medoids —otherwise known as partitioning around medoids (PAM)— was proposed as an extension to \(K\)-Means which allows using any alternative dissimilarity measure [5]. The \(K\)-Medoids objective function is given by \[\sum_{i=1}^n\sum_{k=1}^K z_{ik} d\left(\mathbf{x}_i, \boldsymbol{\psi}_k\right),\] where \(d\left(\mathbf{x}_i, \boldsymbol{\psi}_k\right)\) can be any distance measure rather than squared Euclidean and \(\boldsymbol{\psi}_k\) is used in place of the mean vector \(\boldsymbol{\mu}_k\). The PAM algorithm works in much the same fashion as \(K\)-Means, alternating between an allocation step which assigns each observation to the cluster with the closest \(\boldsymbol{\psi}_k\) (according to the specified distance measure) and an update step which minimises the within-cluster total distance (WCTD). Notably, when minimising with respect to \(\boldsymbol{\psi}_k\), the notion of a cluster centroid \(\boldsymbol{\mu}_k\) is redefined as a cluster medoid \(\boldsymbol{\psi}_k\), which is selected among the rows of the observed data set, i.e., the medoid is the observation \(\mathbf{x}_i\) from which the distance to all other observations currently allocated to the same cluster, according to the specified distance measure, is minimised. Similar to \(K\)-Means, the medoids obtained at convergence again enable straightforward characterisation of a “typical” observation from each cluster and the elbow method from Section 8.3.1.2.1 can be adapted to guide the choice of \(K\) in \(K\)-Medoids by plotting a range of candidate \(K\) values against the within-cluster total distance.

This reformulation has three main advantages. Firstly, the distance \(d\left(\mathbf{x}_i, \boldsymbol{\psi}_k\right)\) is not squared, which diminishes the influence of outliers. As \(K\)-Means relies on squared Euclidean distances, which inflates the distances of atypical observations, and defines centroids as means, it is not robust to outliers. Secondly, by defining the medoids as observed rows of the data, rather than finding the value of \(\boldsymbol{\psi}_k\) that minimises in general, which could potentially be difficult to estimate for complex data types or particularly sophisticated dissimilarity measures, the algorithm can be much more computationally efficient. It requires only a pre-calculated pairwise dissimilarity matrix as input. Finally, the flexibility afforded by being able to modify the dissimilarity measure enables data which are not strictly continuous to be clustered. In other words, \(K\)-Medoids is applicable in cases where the mean is undefined. As examples, one could use the Manhattan or general Minkowski distances as alternatives for clustering continuous data, the Hamming [41], Jaccard [42], or Sørensen-Dice [43, 44] distances for clustering binary data, or the Gower distance [45] for clustering mixed-type data with both continuous and categorical features. The closely related \(K\)-Modes algorithm [46] has also been proposed specifically for purely categorical data applications, as well as the \(K\)-Prototypes algorithm [47] for mixed-type variables applications; neither will considered further in this chapter). As their names suggest, they again redefine the notion of a centroid but otherwise proceed much like \(K\)-Means and \(K\)-Medoids.

The function pam() in the cluster library in R provides an implementation of \(K\)-Medoids, with options for implementing many recent additional speed improvements and improved initialisation strategies [48]. We will discuss these in the \(K\)-Medoids portion of the later R tutorial in Section 8.4.2.2. Most dissimilarity measures we will use are implement in the base-R function dist(), with the exception of the Gower distance which is implemented in the daisy() function in the cluster library.

3.2 Agglomerative hierarchical clustering

Hierarchical clustering is another versatile and widely-used dissimilarity-based clustering paradigm. Though also dissimilarity-based, hierarchical clustering differs from partitional clustering methods like \(K\)-Means and \(K\)-Medoids in that it typically doesn’t avail of the notion of computing distances to a central prototype, be that a centroid mean vector or a medoid, but instead greedily builds a hierarchy of clusters based on dissimilarities between observations themselves and sets of observations. Consequently, a hierarchical clustering solution provides a set of partitions, from a single cluster to as many clusters as observations, rather than the single partition obtained by \(K\)-Means and \(K\)-Medoids. The results of a hierarchical clustering are usually presented in the form of a dendrogram visualisation, which illustrates the arrangement of the set of partitions visited and can help guide the decision of the optimal single clustering partition to extract. However, hierarchical clustering shares some of the advantages \(K\)-Medoids has over \(K\)-Means. Firstly, any valid measure of distance can be used, so it is not restricted to squared Euclidean distances and not restricted to clustering purely continuous data. Secondly, hierarchical clustering algorithms do not require the data set itself as input; all that is required is a matrix of pairwise distances.

Broadly speaking, there are two categories of hierarchical clustering:

Agglomerative: Starting from the bottom of the hierarchy, begin with each observation in a cluster of its own and successively merged pairs of clusters while moving up the hierarchy, until all observations are in one cluster. This approach is sometimes referred to as agglomerative nesting (AGNES; [6]).

Divisive: Starting from the top of the hierarchy, with all observations in one cluster, recursively split clusters while moving down the hierarchy, until all observations are in a cluster of their own. This approach is sometimes referred to as divisive analysis (DIANA; [49]).

However, divisive clustering algorithms such as DIANA are much more computationally onerous for even moderately large data sets. Thus, we focus here on the agglomerative variant of hierarchical clustering, AGNES, which is is implemented in both the agnes() function in the cluster library and the hclust() function in base R. We adopt the latter in the hierarchical clustering portion of the R tutorial in Section 8.4.2.3. That being said, even agglomerative hierarchical clustering has significant computation and memory burdens when \(n\) is large [50, 51].

There are three key decisions practitioners must make when employing agglomerative hierarchical clustering. The first of these, the distance measure, has already been discussed in the context of \(K\)-Medoids. We now discuss the other two in turn; namely, the so-called linkage criterion for quantifying the distances between merged clusters as the algorithm moves up the hierarchy, and the criterion used for cutting the resulting dendrogram to produce a single partition.

3.2.1 Linkage criteria

Agglomerative hierarchical clustering employs two different notions of dissimilarity. There is the distance measure, \(d\), such as Euclidean, Manhattan, or Gower distance, which is used to quantify the distance between pairs of single observations in the data set. Different choices of distance measure can lead to markedly different clustering results and it is thus common to run the hierarchical clustering algorithm with different choices of distance measure and compare the results. However, in the agglomerative setting, individual observations are successively merged into clusters. In order to decide which clusters should be combined, it is necessary to quantify the dissimilarity of sets of observations as a function of the pairwise distances of observations in the sets. This gives rise to the notion of a linkage criterion. At each step, the two clusters separated by the shortest distance are combined; the linkage criteria is precisely the definition of ‘shortest distance’ which differentiates different agglomerative approaches. Again, the choice of linkage criterion can have a substantial impact on the result of the clustering so multiple solutions with different combinations of distance measure and linkage criterion should be evaluated.

There are a number of commonly-used linkage criteria which we now describe. A non-exhaustive list of such linkages follows —only those which we use in the later R tutorial in Section 8.4— in which we let \(\mathcal{A}\) and \(\mathcal{B}\) denote two sets of observations, \(\lvert\cdot\rvert\) denote the cardinality of a set, and \(d(a, b)\) denote the distance between observations in those corresponding sets according to the chosen distance measure. We note that ties for the maximum or minimum distances for complete linkage and single linkage, respectively, are broken at random.

Complete linkage: Define the dissimilarity between two clusters as the distance between the two elements (one in each cluster) which are furthest away from each other according to the chosen distance measure \(d\): \[\max_{a \in \mathcal{A}, b \in \mathcal{B}} d(a, b).\]

Single linkage: Define the dissimilarity between two clusters as the distance between the two elements (one in each cluster) which are closest to each other according to the chosen distance measure \(d\): \[\min_{a \in \mathcal{A}, b \in \mathcal{B}} d(a, b).\]

Average linkage: Define the dissimilarity between two clusters as the average distance according to the chosen distance measure \(d\) between all pairs of elements (on in each cluster): \[\frac{1}{\lvert\mathcal{A}\rvert \times \lvert\mathcal{B}\rvert}\sum_{a \in \mathcal{A}}\sum_{b \in \mathcal{B}}d_(a, b).\]

Centroid linkage: Define the dissimilarity between two clusters \(\mathcal{A}\) and \(\mathcal{B}\) as the distance, according to the chosen distance measure \(d\), between their corresponding centroid vectors \(\boldsymbol{\mu}_{\mathcal{A}}\) and \(\boldsymbol{\mu}_{\mathcal{B}}\): \[d(\boldsymbol{\mu}_{\mathcal{A}}, \boldsymbol{\mu}_{\mathcal{B}}).\]

Ward linkage [52]: Instead of measuring the dissimilarity between clusters directly, define the dissimilarity as the cost of merging two clusters as the increase in total within-cluster variance after merging. In other words, minimise the total within-cluster sum-of-squares by finding the pair of clusters at each step which leads to minimum increase in total within-cluster variance after merging, where \(\mathcal{A} \cup \mathcal{B}\) denotes the cluster obtained after merging, with corresponding centroid \(\boldsymbol{\mu}_{\mathcal{A}\cup\mathcal{B}}\): \[\frac{\lvert\mathcal{A}\rvert \times \lvert \mathcal{B}}{\lvert \mathcal{A} \cup \mathcal{B}\rvert}\lVert\boldsymbol{\mu}_{\mathcal{A}} - \boldsymbol{\mu}_{\mathcal{B}}\rVert_2^2 = \sum_{x\in \mathcal{A}\cup\mathcal{B}}\lVert x - \boldsymbol{\mu}_{\mathcal{A}\cup\mathcal{B}}\rVert_2^2 - \sum_{x \in \mathcal{A}}\lVert x - \boldsymbol{\mu}_{\mathcal{A}}\rVert_2^2 - \sum_{x \in \mathcal{B}}\lVert x - \boldsymbol{\mu}_{\mathcal{B}}\rVert_2^2.\]

The Ward and centroid linkage criteria differ from the other linkage criteria in that they are typically meant to be used only when the initial pairwise distances between observations are squared Euclidean distances. All of the above linkage criteria are implemented in the function hclust(), which is available in R without requiring any add-on libraries to be loaded and specifically performs agglomerative hierarchical clustering. Its main arguments are d, a pre-computed pairwise dissimilarity matrix (as can be created from the function dist()), and method, which specifies the linkage criterion (e.g., "complete", "single", "average", and "centroid"). Special care must be taken when employing Ward’s linkage criterion as two options are available: "ward.D", which assumes that the initial pairwise distance matrix already consists of squared Euclidean distances, and "ward.D2", which assumes the distances are merely Euclidean distances and performs the squaring internally [53].

3.2.2 Cutting the dendrogram

One might notice that when calling hclust(), the number of clusters \(K\) is not specified in advance, as it is when calling kmeans() or pam(). Instead, hclust() returns an object which describes the hierarchy of the tree produced by the clustering process. A visualisation of such a tree is referred to as a dendrogram, which can be thought of as a representation of a set of candidate partitions. In a dendrogram representation of an agglomerative hierarchical clustering solution, each observation is initially in a singleton cluster on its own, along the x-axis, according to their similarities. Thereafter, each observation, and subsequently each set of observations, are merged along the y-axis in a nested fashion. The scale along the y-axis is proportional to the distance, according to the chosen linkage criterion, at which two clusters are combined. In the end, the groups formed towards the bottom of the graph are close together, whereas those at the top of the graph are far apart.

Obtaining a single hard partition of objects into disjoint clusters is obtained by cutting the dendrogram horizontally at the corresponding height. In other words, observations are allocated to clusters by cutting the tree at an appropriate height. Generally, the lower this height, the greater the number of clusters (theoretically, there can be as many clusters as there are observations, \(n\)), while the greater the height, the lower the number of clusters (theoretically, there can be as few as only one cluster, corresponding to no group structure in the data). Thus, an advantage of hierarchical clustering is that the user need not know \(K\) in advance; the user can manually select \(K\) after the fact by examining the constructed tree and fine-tuning the output to find clusters with a desired level of granularity. There is no universally applicable rule for determining the optimal height at which to cut the tree, but it is common to select a height in the region where there is the largest gap between merges, i.e., where there is a relatively wide range of distances over which the number of clusters in the resulting partition does not change. This is, of course, very much guided by the visualisation itself.

In R, one can visualise the dendrogram associated with a particular choice of distance measure and linkage criterion by calling plot() on the output from hclust(). Thereafter, the function cutree() can be used to obtain a single partition. This function takes the arguments tree, which is the result of a call to hclust(), h which is the height at which the tree should be cut, and k which more directly allows the desired number of clusters to be produced. Specifying k finds the corresponding height which yields k clusters and overrides the specification of h.

However, it is often the case that certain combinations of dissimilarity measure and linkage criterion produce undesirable dendrograms. In particular, complete linkage is known to perform poorly in the presence of outliers, given its reliance on maximum distances, and single linkage is known to produce a “chaining” effect on the resulting dendrogram, whereby, due to its reliance on minimum distances, observations tend to continuously join increasingly larger, existing clusters rather than being merged with other observations to form new clusters. A negative consequence of this phenomenon is lack of cohesion: observations at opposite ends of the same cluster in a dendrogram could be quite dissimilar. These limitations can also be attributed to hierarchical clustering —regardless of the linkage employed— optimising a local criterion for each merge, unlike \(K\)-Means and \(K\)-Medoids which endeavour to optimise global objectives.

3.3 Choosing the number of clusters

Determining the number of clusters in a data set is a fraught task. Throughout Section 8.3.1 and Section 8.3.2, method-specific strategies for guiding the choice of \(K\) were presented. However, they are not without their limitations. For \(K\)-Means and \(K\)-Medoids, the elbow method is somewhat subjective and unreliable. Often, the presence of an elbow is not so clear at a single value of \(K\). Likewise, for agglomerative hierarchical clustering, choosing a height at which to cut the dendrogram as the criterion for choosing \(K\) has also been criticised as an overly subjective method. Moreover, these strategies are only capable of identifying the best \(K\) value conditional on the chosen method and do not help to identify the overall best solution among multiple competing methods. Moreover, we are required to choose more than just the optimal \(K\) value when using \(K\)-Medoids (for which different solutions with different dissimilarity measures and different \(K\) values can be obtained) and agglomerative hierarchical clustering (for which different solutions can be obtained using different dissimilarity measures and linkage criteria).

Overcoming these ambiguities and identifying a more general strategy for comparing the quality of clustering partitions is a difficult task for which many criteria have been proposed. Broadly speaking, cluster quality measures fall into two categories:

Comparing of the uncovered partition to a reference clustering (or known grouping labels).

Measuring of internal cluster consistency without reference to ground truth labels.

The first is typically conducted using the Rand index [54] or adjusted Rand index [55], which measure the agreement between two sets of partitions. However, as we will be exploring clustering in exploratory, unsupervised settings, using data for which there is no assumed “true” group structure, we will instead focus on a quality measure of the latter kind. As previously stated, a large number of such criteria have been proposed in the literature: several are summarised in Table 7 of Chapter 10 of this book [18], where they are used to guide the choice of \(K\) for agglomerative hierarchical clustering in the context of sequence analysis. Here, however, for the sake of brevity, we describe only one commonly used criterion which we later employ in the R tutorial in Section 8.4 —which is itself a dissimilarity-based measure and is thus universally applicable to all clustering algorithms we employ— even if in most applications it would be wise to inform the choice of \(K\) with several such quantitative criteria. Moreover, the practitioner’s own subject matter expertise and assessment of the interpretability of the obtained clusters should also be used to inform the choice of \(K\).

The quantitative criterion we employ is referred to as the average silhouette width (ASW) criterion [56], which is routinely used to assess the cohesion and separation of the clusters uncovered by dissimilarity-based methods. Cohesion refers to the tendency to group similar objects together and separation refers to the tendency to group dissimilar objects apart in non-overlapping clusters. As the name implies, the ASW is computed as the average of observation-specific silhouette widths. Under the assumption that \(K>1\), silhouette widths and the ASW criterion are calculated as follows:

Let \(a(i)\) be the average dissimilarity from observation \(i\) to the other members of the same cluster to which observation \(i\) is assigned.

Compute the average dissimilarity from observation \(i\) to the members of all \(K-1\) other clusters: let \(b(i)\) be the minimum such distance computed.

The silhouette for observation \(i\) is then defined to be \[\begin{align*} s(i) &= \frac{b(i) - a(i)}{\max\{a(i), b(i)\}}\\ &= \begin{cases} 1 - \frac{a(i)}{b(i)} & \mbox{if}\:a(i) < b(i)\\ 0& \mbox{if}\:a(i)=b(i)\\ \frac{b(i)}{a(i)} - 1 & \mbox{if}\:a(i) > b(i), \end{cases} \end{align*}\] unless observation \(i\) is assigned to a cluster of size \(1\), in which case \(s(i)=0\). Notably, \(a(i)\) and \(b(i)\) need not be calculated using the same dissimilarity measure with which the data were clustered; it is common to adopt the Euclidean distance.

Define the ASW for a given partition \(\mathcal{C}\) as: \(\mbox{ASW}\left(\mathcal{C}\right)=\frac{1}{n}\sum_{i=1}^n s_i.\)

Given that \(-1 \le s(i) \le 1\), the interpretation of \(s(i)\) is that a silhouette close to \(1\) indicates that the observation has been well-clustered, a silhouette close to \(-1\) indicates that the observation would be more appropriately assigned to another cluster, and a silhouette close to zero indicates that the observation lies on the boundary of two natural clusters. If most values of \(s(i)\) are high, then the clustering solution can be deemed appropriate. This occurs when \(a(i) \ll b(i)\), meaning that observation \(i\) must be well-matched its own cluster and poorly-matched to all other clusters. Conversely, if most \(s(i)\) values are low or even negative, this provides evidence that \(K\) may be too low or too high.

The values of \(s(i)\) can be averaged over all observations assigned to the same cluster, as a measure of how tightly grouped the observations in the given cluster are. The ASW criterion itself is simply the mean silhouette width over all observations in the entire data set. Generally, clustering solutions with higher ASW are to be preferred, however it is also prudent to dismiss solutions with many negative silhouettes or particularly low cluster-specific mean silhouettes. A silhouette plot can help in this regard; such a plot depicts all values of \(s(i)\), grouped according to the corresponding cluster and in decreasing order within a cluster. In the R tutorial which follows, ASW values and silhouette plots will guide the choice of an optimal clustering solution in a comparison of multiple methods in Section 8.4.2.4.

4 Tutorial with R

In this section, we will learn how to perform clustering using the R programming language [7], using all methods described throughout Section 8.3. We start by loading the necessary libraries. We will use cluster [36] chiefly for functions related to \(K\)-Medoids and silhouettes. As per other chapters in this book, we use tidyverse [57] for data manipulation and rio [58] for downloading the data: see Section 12.4.3 for details the on the data pre-processing steps employed.

library(cluster)

library(rio)

library(tidyverse)We note that hierarchical clustering and \(K\)-Means are implemented in base R and thus no dedicated libraries need to be loaded.

4.1 The data set

Our case study will be to identify different groups of participants that have a similar role in the discussion forum of a massive open online course (MOOC) for teachers. For that purpose, we will rely on the centrality measures of the participants which indicate their number of contributions (OutDegree), replies (InDegree), position in the network (Closeness_total), worth of their connections (Eigen), spread of their ideas (Diffusion_degree), and more. For more details about the data set, please refer to the data chapter of the book (Chapter 2; [59]). To learn more about centrality measures and how to calculate them, refer to the social network analysis chapter (Chapter 15; [60]). We will henceforth refer to these data as “the MOOC centralities data set”. We can download and preview the data with the following commands:

URL <- "https://github.com/lamethods/data/raw/main/6_snaMOOC/"

df <- import(paste0(URL, "Centralities.csv"))

dfAdditionally, a number of categorical variables pertaining to demographic characteristics are available for the same participants. Again, please refer to the data chapter of the book (Chapter 2; [59]) for more details on these variables. With an appropriate distance measure, namely the Gower distance measure, we will incorporate some of these variables in our applications of \(K\)-Medoids and agglomerative hierarchical clustering (but not \(K\)-Means) in addition to the continuous variables contained in df, largely for the purpose of demonstrating clustering methods which are capable of clustering variables of mixed type. We can download and preview the auxiliary categorical data set with the commands below. Note that we select only the following variables:

experience(coded as a level of experience, 1–3),gender(female or male),region(Midwest, Northeast, South, and West U.S.A., along with International),

for the sake of simplifying the demonstration of mixed-type variables clustering and reducing the computational burden. We also extract the UID column, a user ID which corresponds to the name column in df, which will be required for later merging these two data sets. We also ensure that all but this leading UID column is formatted as a factor.

demog <- import(paste0(URL, "DLT1%20Nodes.csv"))

demog <- demog |>

select(UID, experience, gender, region) |>

mutate_at(vars(-UID), as.factor)

demog4.1.1 Pre-processing the data

In df, the first column (name) is the student identifier, and the remaining columns are each of the centrality measures calculated from students’ interactions in the MOOC forum. We will eventually discard the name column from future analyses; we retain it for the time being so that df and demog can be merged appropriately. The data also contain a small number of observations —only three rows of df— with missing values for the variable Closeness_total, as seen by

df |> is.na() |> which(arr.ind=TRUE) row col

441 441 4

442 442 4

443 443 4Given that none of the clustering methods described in this chapter are capable of accommodating missing data, we remove these three observations for future analyses too. Furthermore, one of the rows in demog has NULL recorded for the gender variable. We remove all invalid rows from both df and demog. The function complete.cases() constructs a completely observed data set by extracting the rows which contain one or more missing values and we augment the index of fully observed rows with an index of non-NULL gender values. Finally, we drop the redundant NULL level from the factor variable gender.

obs_ind <- complete.cases(df) & demog$gender != "NULL"

df$name[!obs_ind] # indices of observations with missing values[1] 439 441 442 443df <- df |> filter(obs_ind)

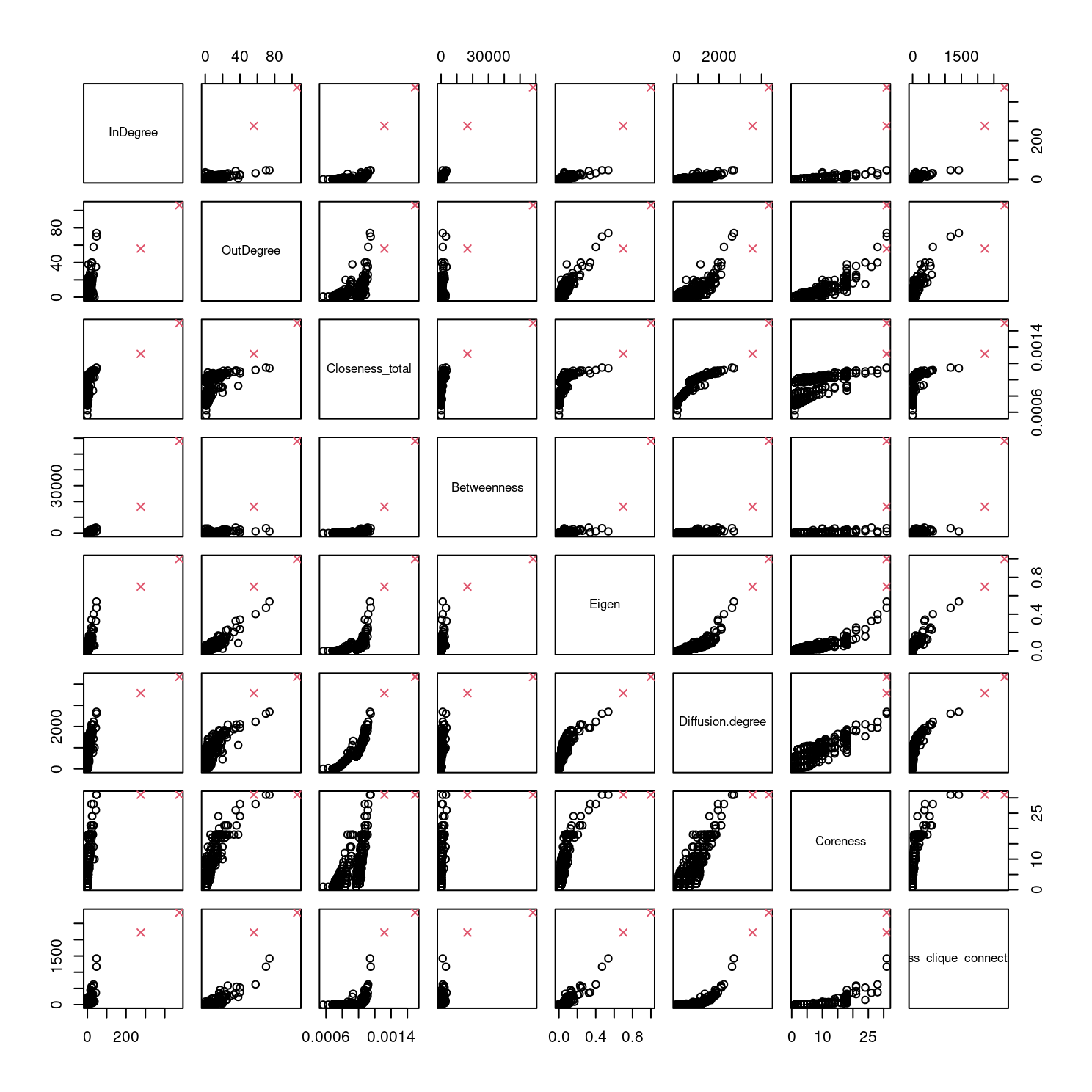

demog <- demog |> filter(obs_ind) |> droplevels()Before proceeding any further, it would be prudent to explore the complete data visually, which we do via the matrix of pairwise scatter plots, excluding the name column, in Figure 8.1:

pairs(df[,-1])

From the plots in Figure 8.1, we can see that there are clearly two quite extreme outliers. Simple exploratory analyses (not shown) confirms that these are the final two rows of the complete data set. These observations are known to correspond to the two instructors who led the discussion. They have been marked using a red cross symbol in Figure 8.1. Though we have argued that \(K\)-Medoids is a more robust clustering method than \(K\)-Means, for example, we also remove these observations in order to avoid their detrimental effects on the \(K\)-Means output. The rows must be removed from both df and demog so that they can later be merged. With these observations included, \(K\)-Means for instance would likely add one additional cluster containing just these two observations, about whom we already know their role differs substantially from the other observations, as they are quite far from the bulk of the data in terms of squared Euclidean distance. That is not to say, however, that there will not still be outliers in df after removing these two cases.

keep_ind <- 1:(nrow(df) - 2)

df <- df |> slice(keep_ind)

demog <- demog |> slice(keep_ind)As is good practice when using dissimilarity-based clustering algorithms, we pre-process the purely continuous data by normalising each variable to have a mean of 0 and a variance of 1, by constructing the scaled data frame sdf using the function scale(), again excluding the name column.

sdf <- scale(df[,-1], center=TRUE, scale=TRUE)The object sdf can be used for all clustering methods described herein. To also accommodate the categorical demographic variables, we use merge() to combine both the scaled continuous data and the categorical data. This requires some manipulation of the name column, with which the two data sets are merged, but we ultimately discard the superfluous name column, which we do not want to contribute to any pairwise distance matrices or clustering solutions, from both merged_df and sdf.

merged_df <- data.frame(name=df$name, sdf) |>

merge(demog, by=1) |>

select(-name)Finally, before proceeding to apply various clustering methods to these data, we present summaries of the counts of each level of the categorical variables experience, gender, and region. These variables imply groupings of size three, two, and five, respectively, and it will be interesting to see if this is borne out in any of the mixed-type clustering applications.

table(merged_df$experience)

1 2 3

118 150 171 table(merged_df$gender)

female male

299 140 table(merged_df$region)

International Midwest Northeast South West

32 77 110 168 52 4.2 Clustering applications

We now show how each of the clustering methods described above can be implemented in R, using these data throughout and taking care to address all practical concerns previously raised. For each method —\(K\)-Means in Section 8.4.2.1, \(K\)-Medoids in Section 8.4.2.2, and agglomerative hierarchical clustering in Section 8.4.2.3— we show clustering results following the method-specific guidelines for choosing \(K\). However, we conclude by comparing results across different methods using the average silhouette width criterion to further guide the choice of \(K\) in Section 8.4.2.4. We refrain from providing an interpretation of the uncovered clusters until Section 8.4.2.5, after the optimal clustering solution is identified.

Before we proceed, we set the seed to ensure that results relying on random number generation are reproducible.

set.seed(2024)4.2.1 \(K\)-Means application

We begin by showing a naive use of the kmeans() function, supplying only the scaled data sdf and the pre-specified number of clusters \(K\) via the centers argument. For now, we assume for no particular reason that there are \(K=3\) clusters, just to demonstrate the use of the kmeans() function and its arguments. A number of aspects of the results are printed when we examine the resulting km1 object.

km1 <- kmeans(sdf, centers=3)

km1K-means clustering with 3 clusters of sizes 48, 129, 262

Cluster means:

InDegree OutDegree Closeness_total Betweenness Eigen

1 2.2941694 1.9432559 1.0923551 1.9697769 2.00747791

2 -0.4088814 -0.4311073 -1.4086380 -0.3975783 -0.55451137

3 -0.2189864 -0.1437536 0.4934399 -0.1651209 -0.09475944

Diffusion.degree Coreness Cross_clique_connectivity

1 1.9078646 2.1923592 2.0066265

2 -1.1038258 -0.5622732 -0.3094092

3 0.1939543 -0.1248092 -0.2152835

Clustering vector:

[1] 1 2 2 3 1 1 1 1 3 1 1 3 1 1 1 2 1 3 1 2 2 1 2 1 3 1 1 2 1 1 2 3 3 1 1 1 2

[38] 3 3 2 1 3 2 1 3 3 2 3 1 1 3 3 1 1 2 3 3 1 3 1 1 1 1 1 3 3 1 1 3 3 3 3 2 3

[75] 3 3 3 3 2 2 3 3 3 2 3 2 3 1 2 3 3 1 2 3 3 3 2 1 3 1 3 3 3 3 3 2 3 2 3 3 2

[112] 3 3 3 1 1 2 2 2 3 3 2 2 3 2 3 3 3 3 2 3 2 3 3 2 3 1 3 3 2 3 3 3 3 2 3 3 3

[149] 2 3 2 3 2 3 3 2 3 3 2 3 3 3 3 2 3 3 3 2 3 3 3 3 3 2 2 3 3 3 3 2 3 2 3 3 3

[186] 3 3 3 3 3 2 3 3 3 3 3 2 1 3 3 3 3 3 3 3 3 3 2 2 2 3 3 2 3 2 2 3 3 1 2 3 2

[223] 1 2 2 3 2 2 2 2 2 3 2 1 3 3 2 2 3 3 3 3 3 2 3 3 3 3 3 3 3 3 3 2 3 3 3 3 3

[260] 2 3 2 2 2 3 2 3 2 2 2 3 2 2 2 2 3 2 3 3 3 3 2 2 3 2 2 2 2 2 2 2 3 2 2 3 2

[297] 3 3 3 3 3 3 3 3 3 2 2 3 2 1 2 2 2 3 2 2 3 3 2 3 2 3 2 3 3 3 2 2 3 3 3 2 3

[334] 2 3 3 3 3 3 3 3 3 3 3 3 3 3 2 2 3 2 2 2 3 3 3 3 3 2 3 3 3 3 3 3 3 3 3 3 2

[371] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

[408] 3 3 3 3 3 3 3 3 2 2 2 2 2 2 2 2 3 3 3 3 3 3 3 3 1 2 2 2 2 2 2 3

Within cluster sum of squares by cluster:

[1] 858.90295 72.01655 414.30502

(between_SS / total_SS = 61.6 %)

Available components:

[1] "cluster" "centers" "totss" "withinss" "tot.withinss"

[6] "betweenss" "size" "iter" "ifault" Among other things, this output shows the estimated centroid vectors \(\boldsymbol{\mu}_1,\ldots,\boldsymbol{\mu}_K\) upon convergence of the algorithm ($centers), an indicator vector showing the assignment of each observation to one of the \(K=3\) clusters ($cluster), the size of each cluster in terms of the number of allocated observations ($size), the within-cluster sum-of-squares per cluster ($withinss), and the ratio of the between-cluster sum-of-squares ($betweenss) to the total sum of squares ($totss). Ideally, this ratio should be large, if the total within-cluster sum-of-squares is minimised. We can access the TWCSS quantity by typing

km1$tot.withinss[1] 1345.225However, these results were obtained using the default values of ten maximum iterations (iter.max=10, by default) and only one random set of initial centroid vectors (nstart=1, by default). To increase the likelihood of converging to the global minimum, it is prudent to increase iter.max and nstart, to avoid having the algorithm terminate prematurely and avoid converging to a local minimum, as discussed in Section 8.3.1.2.2. We use nstart=50, which is reasonably high but not so high as to incur too large a computational burden.

km2 <- kmeans(sdf, centers=3, nstart=50, iter.max=100)

km2$tot.withinss[1] 1339.226We can see that the solution associated with the best random starting values achieves a lower TWCSS than the earlier naive attempt. Next, we see if an even lower value can be obtained using the \(K\)-Means initialisation strategy, by invoking the kmeans_pp() function from Section 8.3.1.2.2 with K=3 centers and supplying these centroid vectors directly to kmeans(), rather than indicating the number of random centers to use.

km3 <- kmeans(sdf, centers=kmeans_pp(sdf, K=3), iter.max=100)

km3$tot.withinss[1] 1343.734In this case, using the \(K\)-Means initialisation strategy did not further reduce the TWCSS; in fact it is worse than the solution obtained using nstart=50. However, recall that \(K\)-Means is itself subject to randomness and should therefore also be run several times, though the number of \(K\)-Means runs need not be so high as 50. In the code below, we use replicate() to invoke both kmeans_pp() and kmeans() itself ten times in order to obtain a better solution.

KMPP <- replicate(10, list(kmeans(sdf, iter.max=100,

centers=kmeans_pp(sdf, K=3))))Among these ten solutions, five are identical and achieve the same minimum TWCSS value.

TWCSS <- sapply(KMPP, "[[", "tot.withinss")

TWCSS [1] 1345.225 1339.226 1339.226 1339.226 1340.457 1339.226 1345.225 1345.225

[9] 1345.225 1339.226Thereafter, we can extract a solution which minimises the tot.withinss as follows:

km4 <- KMPP[[which.min(TWCSS)]]

km4$tot.withinss[1] 1339.226Finally, this approach resulted in an identical solution to km2 being obtained — with just ten runs of \(K\)-Means and \(K\)-Means rather than nstart=50 runs of the \(K\)-Means algorithm alone— which is indeed superior to the solution obtained with just one uninformed random start in km1. We will thus henceforth adopt this initialisation strategy always.

To date, the \(K\)-Means algorithm has only been ran with the fixed number of clusters \(K=3\), which may be suboptimal. The following code iterates over a range of \(K\) values, storing both the kmeans() output itself and the TWCSS value for each \(K\). The range \(K=1,\ldots,10\) notably includes \(K=1\), corresponding to no group structure in the data. The reason for storing the kmeans() output itself is to avoid having to run kmeans() again after determining the optimal \(K\) value; such a subsequent run may not converge to the same minimised TWCSS and having to run the algorithm again would be computationally wasteful.

K <- 10 # set upper limit on range of K values

TWCSS <- numeric(K) # allocate space for TWCSS estimates

KM <- list() # allocate space for kmeans() output

for(k in 1:K) { # loop over k=1,...,K

# Run K-means using K-Means++ initialisation:

# use the current k value and do so ten times if k > 1

KMPP <- replicate(ifelse(k > 1, 10, 1),

list(kmeans(sdf, iter.max=100,

centers=kmeans_pp(sdf, K=k))))

# Extract and store the solution which minimises the TWCSS

KM[[k]] <- KMPP[[which.min(sapply(KMPP, "[[", "tot.withinss"))]]

# Extract the TWCSS value for the current value of k

TWCSS[k] <- KM[[k]]$tot.withinss

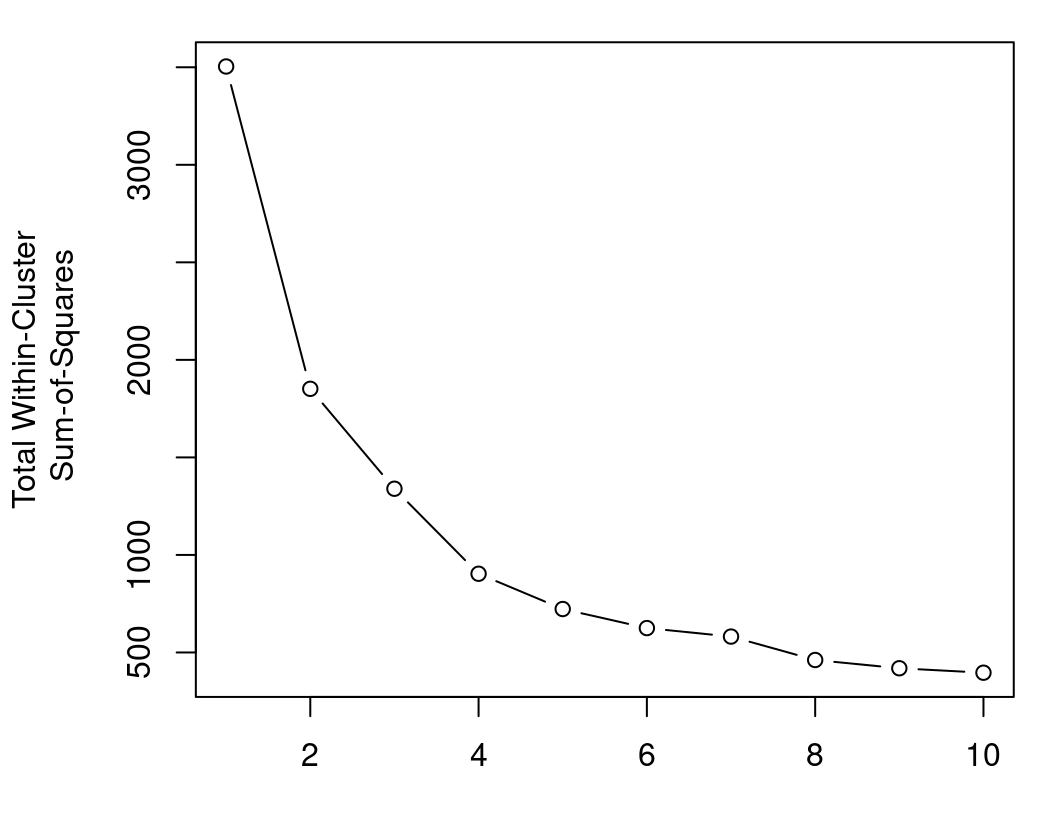

}As previously stated in Section 8.3.1.2.1, the so-called “elbow method” consists of plotting the range of \(K\) values on the x-axis against the corresponding obtained TWCSS values on the y-axis and looking for an kink in the resulting curve.

plot(x=1:K, y=TWCSS, type="b",

xlab="K", ylab="Total Within-Cluster\n Sum-of-Squares")

Here, it appears that \(K=4\) would produce the best results: beyond \(K=4\), the decrease in TWCSS is minimal, which suggests that an extra cluster is not required to model the data well; between \(K=3\) and \(K=4\), there is a more substantial decrease in TWCSS, which suggests that the fourth cluster is necessary. This method is of course highly subjective, and we will further inform our choice of \(K\) for \(K\)-Means using silhouette widths and the ASW criterion in Section 8.4.2.4.

For now, we can interrogate the \(K\)-Means solution with \(K=4\) by examining the sizes of the clusters and their centroid vectors, using the corresponding fourth element of the list KM by setting K <- 4.

K <- 4

KM[[K]]$size[1] 127 57 8 247KM[[K]]$centers InDegree OutDegree Closeness_total Betweenness Eigen Diffusion.degree

1 -0.4086612 -0.4381057 -1.4220978 -0.3994123 -0.5596480 -1.1138889

2 1.5706416 1.1372352 0.9247804 1.3859592 1.0781087 1.4216179

3 4.1035975 5.0512503 1.5201941 3.4673658 5.4946785 3.1631065

4 -0.2852445 -0.2007813 0.4685522 -0.2267743 -0.1390054 0.1422138

Coreness Cross_clique_connectivity

1 -0.5672245 -0.3102236

2 1.7423739 0.9615041

3 3.4821417 5.5033583

4 -0.2232184 -0.2406243However, these centroids correspond to the scaled version of the data created in Section 12.4.3. Interpretation can be made more straightforward by undoing the scaling transformation on these centroids, so that they are on the same scale as the data df rather than the scaled data sdf which was used as input to kmeans(). The column-wise means and standard deviations used when scale() was invoked are available as the attributes "scaled:center" and "scaled:scale", respectively and are used in the code below. We show these centroids rounded to four decimal places in Table 8.1.

rescaled_centroids <- t(apply(KM[[K]]$centers, 1, function(r) {

r * attr(sdf, "scaled:scale") + attr(sdf, "scaled:center")

} ))

round(rescaled_centroids, 4)| InDegree | OutDegree | Closeness_total | Betweenness | Eigen | Diffusion.degree | Coreness | Cross_clique_connectivity |

|---|---|---|---|---|---|---|---|

| 1.1024 | 1.7480 | 0.0008 | 20.7010 | 0.0071 | 144.1732 | 2.6378 | 4.6850 |

| 15.3684 | 14.8421 | 0.0010 | 849.9328 | 0.0996 | 1370.1053 | 16.1053 | 157.5789 |

| 33.6250 | 47.3750 | 0.0011 | 1816.6608 | 0.3492 | 2212.1250 | 26.2500 | 703.6250 |

| 1.9919 | 3.7206 | 0.0010 | 100.8842 | 0.0308 | 751.5061 | 4.6437 | 13.0526 |

Now, we can more clearly see that the first cluster, with \(n_{k=1}=127\) observations, has the lowest mean value for all \(d=8\) centrality measures, while the last cluster, the largest with \(n_{k=4}=247\) observations, has moderately larger values for all centrality measures. The two smaller clusters, cluster two with \(n_{k=2}=57\) observations and cluster three with only \(n_{k=3}=8\) observations have the second-largest and largest values for each measure, respectively. As previously stated, we defer a more-detailed interpretation of uncovered clusters to Section 8.4.2.5, after the optimal clustering solution has been identified.

4.2.2 \(K\)-Medoids application

The function pam() in the cluster library implements the PAM algorithm for \(K\)-Medoids clustering. By default, this function requires only the arguments x (a pre-computed pairwise dissimilarity matrix, as can be created from the functions dist(), daisy(), and more) and k, the number of clusters. However, there are many options for many additional speed improvements and initialisation strategies [48]. Here, we invoke a faster variant of the PAM algorithm which necessitates specification of nstart as a number greater than one, to ensure the algorithm is evaluated with multiple random initial medoid vectors, in a similar fashion to kmeans(). Thus, we call pam() with variant="faster" and nstart=50 throughout.

Firstly though, we need to construct the pairwise dissimilarity matrices to be used as input to the pam() function. Unlike \(K\)-Means, we are not limited to squared Euclidean distances. It is prudent, therefore, to explore \(K\)-Medoids solutions with several different dissimilarity measures and compare the different solutions obtained for different measures. Each distance measure will yield different results at the same \(K\) value, thus the value of \(K\) and the distance measure must be considered as a pair when determining the optimal solution.

We begin by calculating the Euclidean, Manhattan, and Minkowski (with \(p=3\)) distances on the scaled continuous data in sdf:

dist_euclidean <- dist(sdf, method="euclidean")

dist_manhattan <- dist(sdf, method="manhattan")

dist_minkowski3 <- dist(sdf, method="minkowski", p=3)Secondly, we avail of another principal advantage of \(K\)-Medoids; namely, the ability to incorporate categorical variables in mixed-type data sets, by calculating pairwise Gower distances between each row of merged_df, using daisy().

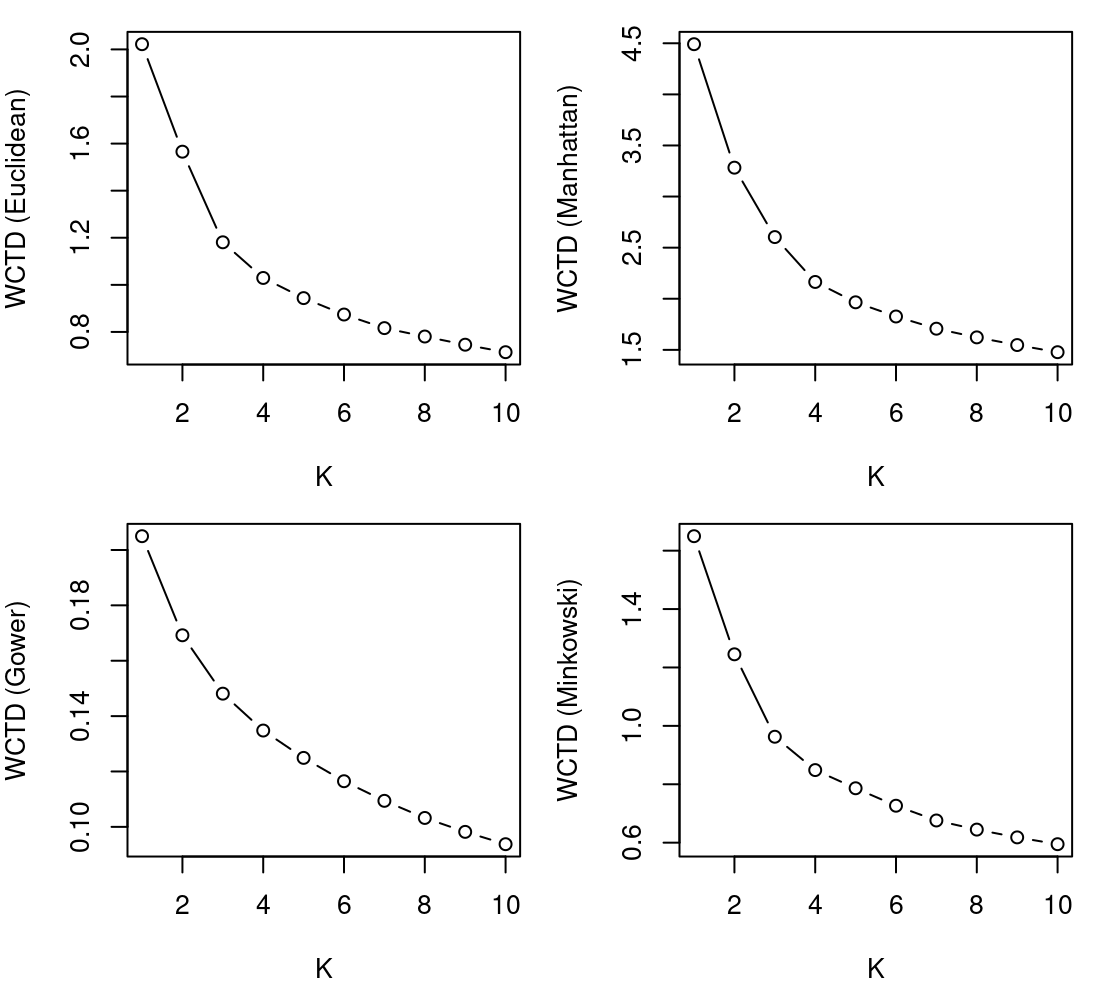

dist_gower <- daisy(merged_df, metric="gower")As per the \(K\)-Means tutorial in Section 8.4.2.1, we can produce an elbow plot by running the algorithm over a range of \(K\) values and extracting the minimised within-cluster total distance achieved upon convergence for each value of \(K\). We demonstrate this for the dist_euclidean input below.

K <- 10

WCTD_euclidean <- numeric(K)

pam_euclidean <- list()

for(k in 1:K) {

pam_euclidean[[k]] <- pam(dist_euclidean, k=k, variant="faster", nstart=50)

WCTD_euclidean[k] <- pam_euclidean[[k]]$objective[2]

}Equivalent code chunks for the dist_manhattan, dist_minkowski3, and dist_gower inputs are almost identical, so we omit them here for the sake of brevity. Suffice to say, equivalent lists pam_manhattan, pam_minkowski3, and pam_gower, as well as equivalent vectors WCTD_manhattan, WCTD_minkowski3, and WCTD_gower, can all be obtained. Using these objects, corresponding elbow plots for all four dissimilarity measures are showcased in Figure 8.3.

Some of the elbow plots in Figure 8.3 are more conclusive than others. As examples, there are reasonably clear elbows at \(K=3\) for the Euclidean and Minkowski distances, arguably an elbow at \(K=4\) for the Manhattan distance, and no clear, unambiguous elbow under the Gower distance. In any case, the elbow method only helps to identify the optimal \(K\) value for a given dissimilarity measure; we defer a discussion of how to choose the overall best \(K\)-Medoids clustering solution to later in this tutorial.

For now, let’s interrogate the \(K=3\) solution obtained using the Euclidean distance. Recall that the results are already stored in the list pam_euclidean, so we merely need to access the third component of that list by setting K <- 3. Firstly, we can examine the size of each cluster by tabulating the cluster-membership indicator vector as follows:

K <- 3

table(pam_euclidean[[K]]$clustering)

1 2 3

67 122 250 Examining the medoids which serve as prototypes of each cluster is rendered difficult by virtue of the input having been a distance matrix rather than the data set itself. Though \(K\)-Medoids defines the medoids to be the rows in the data set from which the distance to all other observations currently allocated to the same cluster, according to the specified distance measure, is minimised, the medoids component of the output instead gives the indices of the medoids within the data set.

pam_euclidean[[K]]$medoids[1] 88 272 45Fortunately, these indices within sdf correspond to the same, unscaled observations within df. Allowing for the fact that observations with name greater than the largest index here were removed due to missingness, they are effectively the values of the name column corresponding to the medoids. Thus, we can easily examine the medoids on their original scale. In Table 8.2, they include the name column, which was not used when calculating the pairwise distance matrices, and are rounded to four decimal places.

df |>

slice(as.numeric(pam_euclidean[[K]]$medoids)) |>

round(4)| name | InDegree | OutDegree | Closeness_total | Betweenness | Eigen | Diffusion.degree | Coreness | Cross_clique_connectivity |

|---|---|---|---|---|---|---|---|---|

| 88 | 16 | 14 | 0.0011 | 675.5726 | 0.1096 | 1415 | 18 | 157 |

| 272 | 1 | 1 | 0.0007 | 0.0000 | 0.0047 | 41 | 2 | 2 |

| 45 | 1 | 2 | 0.0010 | 29.4645 | 0.0194 | 684 | 3 | 5 |

Thus, we can see that there is a small cluster with \(n_{k=1}=67\) observations which has the largest values for all \(d=8\) centrality measures, a slightly larger cluster with \(n_{k=2}=122\) observations and the lowest values for all variables, and the largest cluster with \(n_{k=3}=250\) and intermediate values for all variables. The cluster sizes of the \(K\)-Medoids solution being more evenly balanced than the earlier \(K\)-Means solution is an artefact of \(K\)-Medoids being less susceptible to outlying observations by virtue of not squaring the distances. We can explore this by cross-tabulating the clusters obtained by \(K\)-Means with \(K=4\) and \(K\)-Medoids with \(K=3\) and the Euclidean distance in Table 8.3.

table(pam_euclidean[[3]]$clustering,

KM[[4]]$cluster)| 1 | 2 | 3 | 4 | ||

| 1 | 0 | 57 | 8 | 2 | |

| 2 | 122 | 0 | 0 | 0 | |

| 3 | 5 | 0 | 0 | 245 |

From the cross-tabulation in Table 8.3, we can see that the \(n_k=8\) observations in the smallest \(K\)-Means cluster were absorbed into a larger cluster under the \(K\)-Medoids solution, thereby demonstrating the robustness of \(K\)-Medoids to outliers. Otherwise, both solutions are broadly in agreement.

4.2.3 Agglomerative hierarchical clustering application

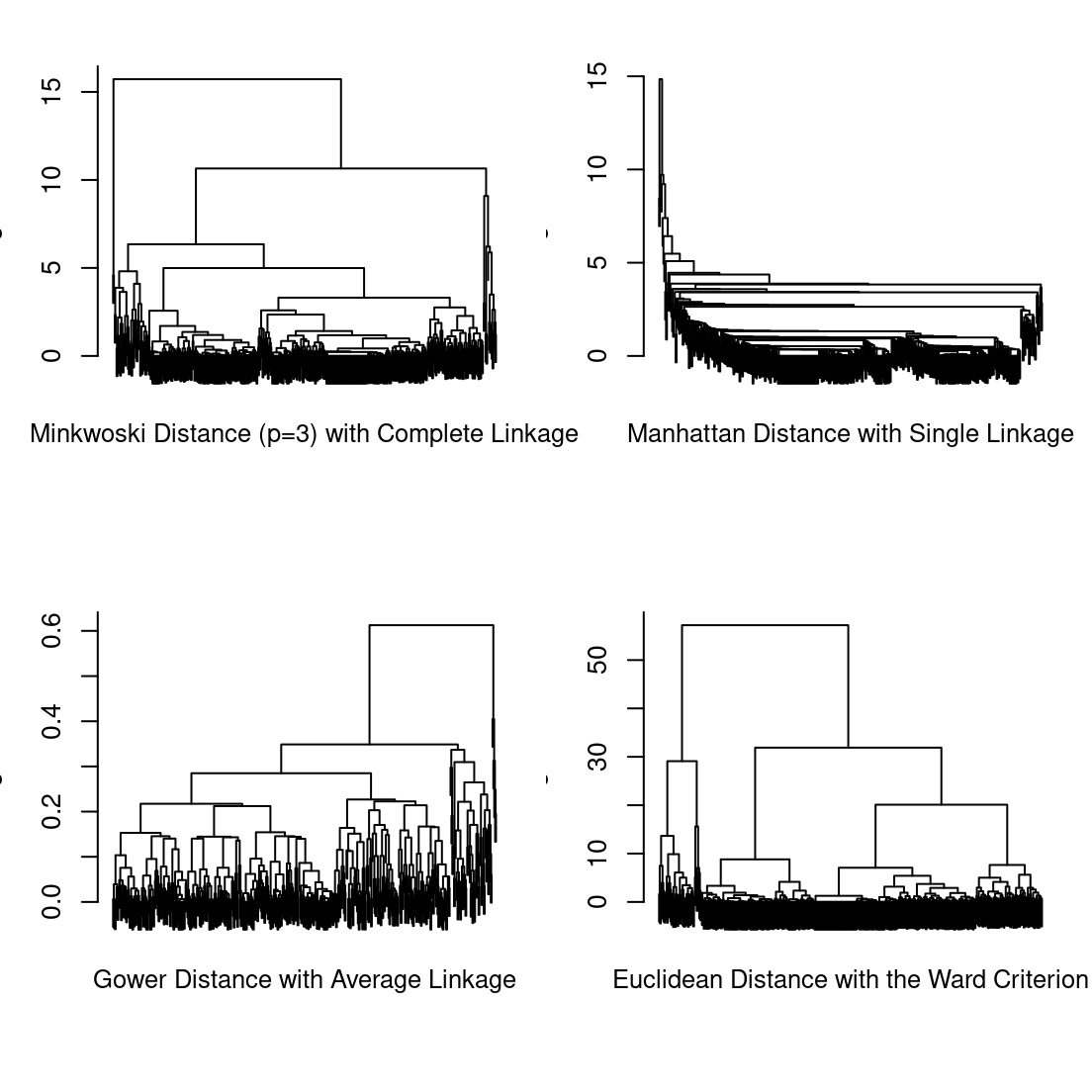

Performing agglomerative hierarchical clustering is straightforward now that the distance matrices have already been created for the purposes of running pam(). All that is required is to specify the distance matrix and an appropriate linkage criterion as the method when calling hclust(). We demonstrate this below for a subset of all possible distance measure and linkage criterion combinations among those described in Section 8.3.2.1. Recall that for the Ward criterion, the underlying distance measure is usually assumed to be squared Euclidean and that "ward.D2" is the correct method to use when the Euclidean distances are not already squared. For method="centroid", we manually square the Euclidean distances.

hc_minkowski3_complete <- hclust(dist_minkowski3, method="complete")

hc_manhattan_single <- hclust(dist_manhattan, method="single")

hc_gower_average <- hclust(dist_gower, method="average")

hc_euclidean_ward <- hclust(dist_euclidean, method="ward.D2")